What is qwen-image-edit

Qwen-Image-Edit is an open-source image editing foundation model developed by Alibaba's Qwen team. It builds on the 20B-parameter Qwen-Image generative model and extends that model's text rendering capabilities to image editing, enabling precise edits to Chinese and English text within images. The model supports both semantic editing and appearance editing, with the aim of simplifying image creation and modification.

How to use qwen-image-edit

- Install Dependencies: Use pip to install the necessary libraries: `pip install git+https://github.com/huggingface/diffusers`.

- Load Model: Load the Qwen-Image-Edit pipeline using PyTorch, for example `pipe = QwenImageEditPipeline.from_pretrained("Qwen/Qwen-Image-Edit")`, and move it to CUDA if available.

- Edit Images: Provide an input image and a prompt describing the desired changes. Execute the pipeline with parameters like `num_inference_steps` and `true_cfg_scale` to generate the edited image.
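The load-and-edit steps can be sketched end to end as follows. This is a minimal illustration, not an official recipe: the helper names `build_edit_kwargs` and `edit_image`, the file paths, the seed, and the default values (50 steps, a `true_cfg_scale` of 4.0, a blank negative prompt) are assumptions chosen for the example. The heavy imports sit inside `edit_image` so the argument helper can be used without PyTorch installed.

```python
def build_edit_kwargs(prompt, num_inference_steps=50, true_cfg_scale=4.0,
                      negative_prompt=" "):
    """Collect the keyword arguments passed to the pipeline call.

    The default values are illustrative assumptions, not tuned settings.
    """
    if not prompt.strip():
        raise ValueError("prompt must describe the desired edit")
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "num_inference_steps": num_inference_steps,
        "true_cfg_scale": true_cfg_scale,
    }


def edit_image(input_path, output_path, prompt, seed=0):
    """Load Qwen-Image-Edit, apply one prompt-driven edit, save the result."""
    # Imported lazily: these are large dependencies and the model download
    # is substantial, so nothing heavy happens until this function is called.
    import torch
    from PIL import Image
    from diffusers import QwenImageEditPipeline

    # Use CUDA with bfloat16 when available, otherwise fall back to CPU.
    use_cuda = torch.cuda.is_available()
    dtype = torch.bfloat16 if use_cuda else torch.float32
    pipe = QwenImageEditPipeline.from_pretrained(
        "Qwen/Qwen-Image-Edit", torch_dtype=dtype
    )
    pipe.to("cuda" if use_cuda else "cpu")

    image = Image.open(input_path).convert("RGB")
    kwargs = build_edit_kwargs(prompt)

    # A fixed seed makes repeated runs comparable.
    with torch.inference_mode():
        result = pipe(image=image, generator=torch.manual_seed(seed), **kwargs)

    result.images[0].save(output_path)
    return output_path
```

Calling `edit_image("input.png", "output.png", "Change the sign text to 'OPEN'")` would then write the edited image to `output.png`; both file names and the prompt are placeholders.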

Features of qwen-image-edit

- Semantic Editing: High-level content and style modifications, character consistency, viewpoint and pose transformations, and style transfer (e.g., Ghibli animation style).

- Appearance Editing: Precise local region editing, element addition/removal, background replacement, and color/attribute adjustments.

- Text Editing: Precise editing of Chinese and English text, preserving original font styles, multi-line layout support, and complex typography.

- Open Source: Released under the Apache 2.0 license, allowing for commercial use.

- Integration: Supports HuggingFace Spaces, ComfyUI nodes, and Alibaba Cloud ModelScope API.

Use Cases of qwen-image-edit

- Modifying image content and style through natural language prompts.

- Precisely editing text within images, including Chinese and English.

- Creating and modifying characters while maintaining consistency.

- Replacing backgrounds or adding/removing elements in images.

- Applying artistic styles to images.

FAQ

- Hardware Requirements: Minimum 8GB VRAM and 64GB system RAM for basic use; 12GB+ VRAM recommended for a smooth experience; 24GB+ VRAM for professional use.

- Commercial Use: Yes, it is free for commercial use under the Apache 2.0 license.

- Comparison to Traditional Tools: Offers AI-powered semantic understanding for complex edits via natural language, unlike manual pixel-based editing.

- Language Support: Supports precise text editing for both Chinese and English.

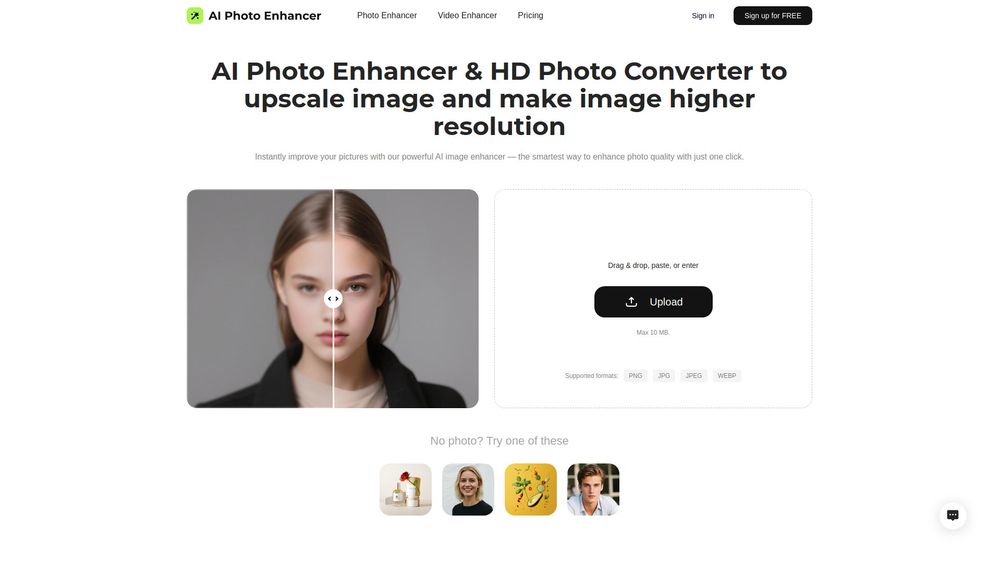

- Supported Formats: Input supports JPG, PNG, WebP; output supports JPG, PNG. Handles resolutions from 512px to 4096px.

- Integration: Can be integrated via HuggingFace Diffusers, ComfyUI nodes, and REST API.

- Batch Processing: Supported through API interfaces for processing multiple images simultaneously.

- Semantic vs. Appearance Editing: Semantic editing changes high-level content such as style, viewpoint, or pose while keeping the subject consistent; appearance editing modifies specific visual elements precisely while leaving the rest of the image unchanged.

- Lower-End Hardware: Quantized versions and LoRA adaptations are available; online interfaces or cloud APIs are alternatives.
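The format, resolution, and VRAM figures in this FAQ can be turned into a small pre-flight check before sending an image to the model. The sketch below uses only the standard library. The function names `check_input` and `hardware_tier` are hypothetical, and two readings of the FAQ are assumptions: that the 512px to 4096px range applies to each side of the image, and that `.jpeg` counts as JPG.

```python
from pathlib import Path

# Input formats listed in the FAQ; treating ".jpeg" as JPG is an assumption.
SUPPORTED_INPUT_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}

# Resolution range from the FAQ, assumed here to apply to each side.
MIN_SIDE_PX = 512
MAX_SIDE_PX = 4096


def check_input(filename, width, height):
    """Return a list of problems; an empty list means the input looks fine."""
    problems = []
    ext = Path(filename).suffix.lower()
    if ext not in SUPPORTED_INPUT_EXTENSIONS:
        problems.append(f"unsupported input format: {ext or '(none)'}")
    for name, side in (("width", width), ("height", height)):
        if not MIN_SIDE_PX <= side <= MAX_SIDE_PX:
            problems.append(
                f"{name} {side}px is outside {MIN_SIDE_PX}-{MAX_SIDE_PX}px"
            )
    return problems


def hardware_tier(vram_gb):
    """Map available VRAM (in GB) to the tiers named in the FAQ."""
    if vram_gb >= 24:
        return "professional"
    if vram_gb >= 12:
        return "recommended"
    if vram_gb >= 8:
        return "basic"
    return "below minimum"
```

For example, `check_input("photo.webp", 1024, 768)` returns an empty list, while a result of `"below minimum"` from `hardware_tier` suggests falling back to the quantized versions or hosted options mentioned above.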